Engineering Manager OS: Master Team Output (–29% PR p50 in 4 weeks)

TL;DR: Outcome: cut pull request (PR) cycle-time median (p50) by 29% in 4 weeks. Action: run the “Manager OS” cadence (daily/weekly/monthly) and measure PR p50 weekly.

Context: New engineering managers often default to “more coding.” I ran a 4-week experiment to test whether small managerial habits—priorities, delegation, feedback, rhythm—could improve whole-team throughput more than an extra manager coding sprint.

Table of Contents

What I changed

- Daily discipline (Manager OS)

- Wrote Top 3 priorities each morning (only tasks I must do).

- Used delegation prompt: “What do you think we should do?” before giving answers.

- Delivered 1 micro-feedback per day (positive or constructive).

- Energy check: blocked 90 minutes for strategy during my peak hours.

- Weekly rhythm

- 1:1s using 3Q: How are you? What’s blocking you? Where do you want to grow?

- Team sync (≤30 min): Wins → Priorities → Risks (with named owners).

- Calendar audit (Fri): killed or delegated one recurring meeting weekly.

- Weekly reflection (15 min): one tweak committed for next week.

- Monthly system

- Team health pulse (anonymous, 3 questions).

- Role clarity: refreshed each person’s top 3 responsibilities.

- Decision log review: merged, archived, or escalated stale items.

- Definitions used (explicit)

- PR cycle time: open → first approval or merge, whichever comes first.

- p50 / p90: median and 90th percentile.

- WIP (work in progress): open issues/PRs assigned to a person.

- Planned work %: story points or items tagged “Planned” ÷ total completed.

- DORA: DevOps Research and Assessment; we reference Deployment Frequency and Lead Time for Changes but tracked PR cycle time as proxy.

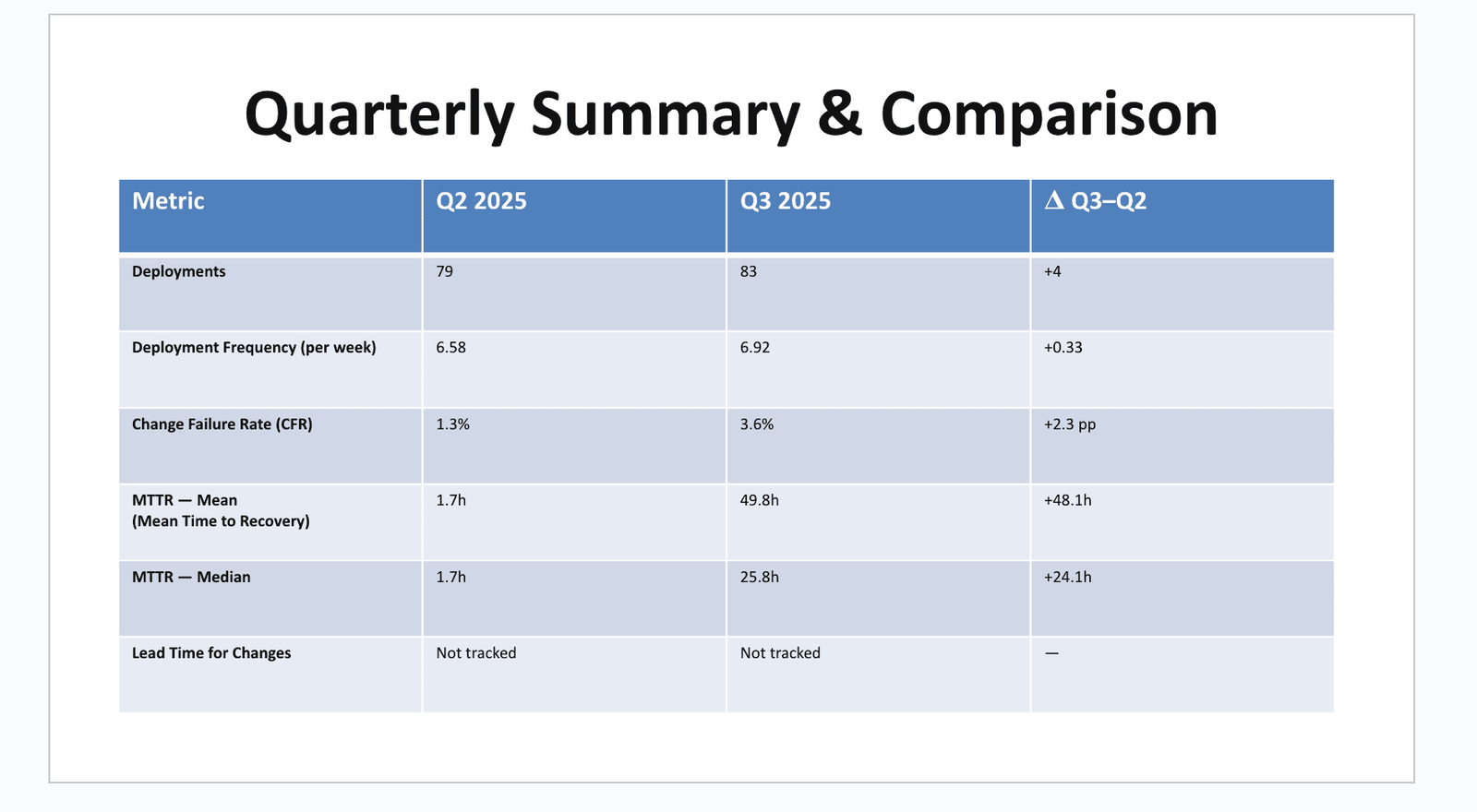

Baseline (4 weeks prior, n values shown)

Data window: 4 weeks, n=96 PRs, n=27 deploys.

| Metric | Definition (short) | Baseline | Notes |

|---|---|---|---|

| PR cycle time p50 | PR open → first approval/merge | 42 h | Skewed; p90=120 h; 6 outliers >7 days |

| Deployment frequency (median/wk) | Prod deploys per week | 5 | Total n=27 in 4 weeks |

| WIP per engineer (p50) | Open items per person | 3 | Range 1–7 |

| Planned work share | Planned ÷ total done | 60% | Unplanned interrupts high |

| 1:1 completion rate | Held ÷ scheduled | 67% | 6 cancellations |

| On-call interrupts (p50/day) | >5-min incidents per eng | 3 | p90=6 |

Observation: Skewed PR times demand medians; outliers were reviewer vacations and unclear ownership.

How to replicate Engineering Manager OS (one-week start)

- Instrument: Capture 4 weeks of PR cycle times, deploy counts, 1:1 completion, WIP, planned %. Use medians; list outliers with causes.

- Install daily loop: Top 3, delegation prompt, one micro-feedback, 90-min strategy block at your personal peak.

- Run 3Q 1:1s this week with every direct; record “blockers → owner → date due”.

- Team sync ≤30 min: Wins → Priorities → Risks (each risk has an owner and a next date).

- Calendar audit (Fri): remove or delegate exactly one recurring meeting ≥30 min.

- Role clarity refresh: write top 3 responsibilities per person; share in team doc.

- Decision log: list open decisions, assign a DRI (directly responsible individual), due date, and escalation path.

Paste-ready artifact (copy/print)

Engineering Manager OS – Weekly Checklist

Data window to monitor (rolling 4 weeks):

[ ] PR cycle time p50, p90; list outliers + causes

[ ] Deploys/week (median); Lead Time proxy if available

[ ] WIP per engineer (p50)

[ ] Planned work % vs unplanned

[ ] 1:1 completion rate

Daily:

[ ] Write Top 3 (only I can do)

[ ] Ask: “What do you think we should do?” before answering

[ ] Give 1 micro-feedback

[ ] 90-min strategy block during peak energy

Weekly:

[ ] 1:1s (3Q): How are you? Blocking? Growth?

[ ] Team sync ≤30 min: Wins → Priorities → Risks(owner)

[ ] Calendar audit: cut/delegate 1 recurring

[ ] Reflection 15 min: 1 tweak for next week

Monthly:

[ ] Team health pulse (3 Qs)

[ ] Role clarity: top 3 responsibilities/person

[ ] Decision log: close, escalate, or commit dates

Risks & gotchas

- Risk: Engineering Manager drifts back to coding to “help.”

Mitigation: Protect the 90-min strategy block; track % time on multiplication tasks vs coding. - Risk: Delegation stalls due to unclear ownership.

Mitigation: In syncs, every risk gets a named owner and next date; publish in the team doc. - Risk: PR outliers hide systemic issues (vacations, neglected reviews).

Mitigation: Track outliers explicitly with causes; add backup reviewers; rotate review ownership. - Risk: Meetings creep back.

Mitigation: Enforce weekly calendar audit with a visible count of meetings cut/delegated. - Risk: 1:1 cancellations reduce signal.

Mitigation: Set a 90% completion SLO (service level objective); reschedule within the same week.

Results after 4 weeks (n=104 PRs, n=31 deploys)

- PR cycle time p50: 42 h → 30 h (–29%); p90: 120 h → 88 h; outliers reduced from 6 to 2.

- Deployment frequency (median/wk): 5 → 6.

- WIP per engineer (p50): 3 → 2.

- Planned work %: 60% → 72%.

- 1:1 completion: 67% → 93%.

Primary drivers: clearer ownership, faster reviews via named backups, fewer interrupts, and less managerial context thrash.

Next (2025-11-04 target)

- Goal: PR cycle time p50 ≤ 24 h, p90 ≤ 72 h; 1:1 completion ≥ 90%; WIP p50 = 2; Planned work ≥ 75%.

- Actions: introduce “review SLAs” (next-business-day on PRs), expand backup reviewers, and pilot a 2-hour weekly “no-meeting build window” for the team.

Bottom line: A manager improving personal output by 10% loses to a team improving by 30%. The Engineering Manager OS shifts time from “doing” to “multiplying,” and the numbers move accordingly.